From Verification to Explanation

Designing an LLM-Based System for Claim Detection and Transparent Reasoning

Large Language Models · Fact-Checking · Explainable AI · Transparency · Decision Support

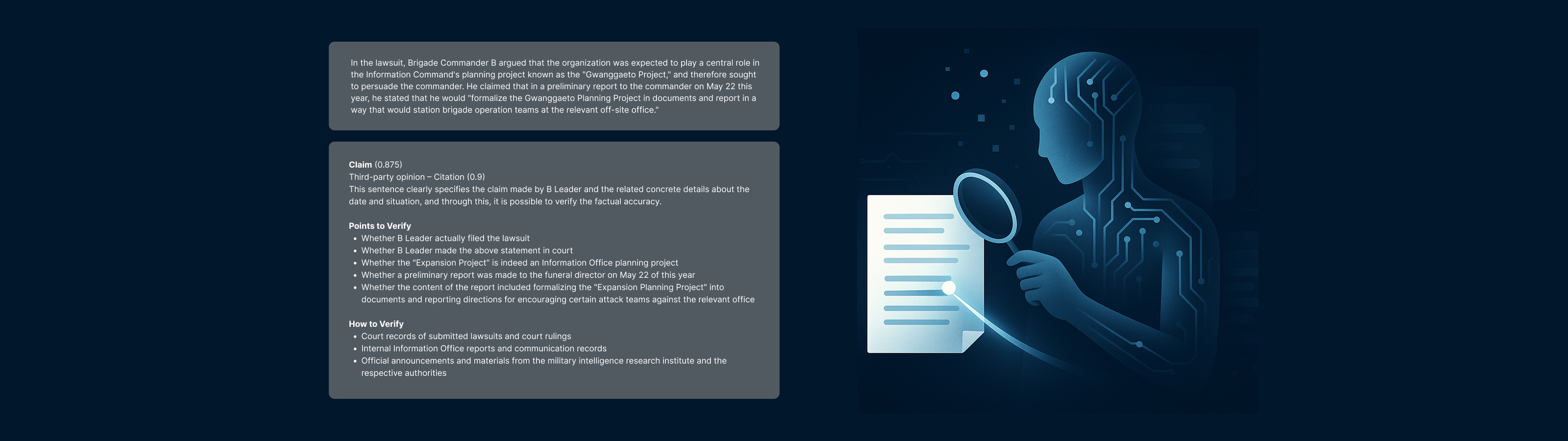

How can we automate fact verification without turning AI into a black box? This project started with the question of whether LLMs could automatically flag claims requiring verification. Initially, the goal was to produce binary outputs ("verifiable" or "not verifiable"). However, through iterative development and internal testing, we found that users would distrust opaque decisions. The system evolved into a decision-support tool that emphasizes reasoning and evidence over simple answers.

Project Overview

Fact-checkers and journalists face growing overload as misinformation spreads, yet existing tools often overwhelm them with volume or offer little transparency. This project designed an LLM-based pipeline that detects verifiable claims in text and provides supporting evidence along with reasoning traces. Instead of replacing human judgment, the system was built to augment it, helping users make better-informed decisions with interpretable outputs.

Approach

We began with a requirements analysis to identify needs around claim detection and explainability. Based on this, we developed an initial pipeline combining LLM-based claim detection with evidence retrieval. The system was refined through eight cycles of internal usability testing by team members. Each iteration involved testing outputs, logging usability pain points, and revising both the prompts and the user experience. Over time, the system shifted from delivering binary verdicts to providing explanatory support, positioning the AI as a judgment-support agent rather than a final arbiter.
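
The two-stage shape described above (claim detection followed by evidence retrieval) might be sketched roughly as follows. This is a minimal illustration, not the project's actual code: `ClaimResult`, `run_pipeline`, `stub_classify`, `stub_retrieve`, and `CORPUS` are hypothetical names, and the stubs stand in for the real LLM call and retrieval backend, which this page does not specify.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class ClaimResult:
    sentence: str
    needs_verification: bool
    reasoning: str                      # explanation shown to the user
    evidence: List[str] = field(default_factory=list)


def split_sentences(text: str) -> List[str]:
    # Naive splitter: break on whitespace that follows ., ! or ?
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def run_pipeline(
    text: str,
    classify: Callable[[str], Tuple[bool, str]],
    retrieve: Callable[[str], List[str]],
) -> List[ClaimResult]:
    results = []
    for sentence in split_sentences(text):
        needs_check, reasoning = classify(sentence)
        # Evidence is only fetched for sentences flagged as check-worthy.
        evidence = retrieve(sentence) if needs_check else []
        results.append(ClaimResult(sentence, needs_check, reasoning, evidence))
    return results


# Hypothetical stand-ins: a real system would call an LLM and a search index.
CORPUS = [
    "Labor statistics report: unemployment rate figures by year.",
    "Weather almanac: average rainfall by region.",
]


def stub_classify(sentence: str) -> Tuple[bool, str]:
    if any(ch.isdigit() for ch in sentence):
        return True, "Contains a numeric statement that can be checked."
    return False, "Reads as opinion; no checkable fact detected."


def stub_retrieve(sentence: str) -> List[str]:
    words = [w.strip(".,!?").lower() for w in sentence.split()]
    keywords = [w for w in words if len(w) > 4]
    return [doc for doc in CORPUS if any(k in doc.lower() for k in keywords)]


results = run_pipeline(
    "Unemployment fell to 3.5 percent last year. I think that is wonderful.",
    stub_classify,
    stub_retrieve,
)
for r in results:
    print(r.needs_verification, "|", r.sentence, "|", r.reasoning, "|", r.evidence)
```

Passing the classifier and retriever in as callables keeps the prompt and the retrieval source swappable, which fits an iterative process where prompts are revised between test cycles.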

Results & Contributions

- Shift from answers to support. Internal testers preferred reasoning-based explanations over binary classifications, shaping the UX direction.

- Improved accuracy across iterations. Claim detection performance increased from 0.61 to 0.96 as the pipeline was refined.

- Enhanced transparency with reasoning traces. The added explanatory outputs let users follow the AI's decision path.
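
As a rough illustration of what a reasoning trace could look like to a user, the sketch below renders a step-by-step trace next to a suggested action instead of a bare verdict. The `TraceStep` structure, the `render_trace` function, and the example steps are assumptions made for illustration; the page does not describe the system's actual output format.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TraceStep:
    label: str   # short name for the step, e.g. "Claim type"
    detail: str  # what the model observed or concluded at this step


def render_trace(claim: str, steps: List[TraceStep], suggestion: str) -> str:
    # Present the claim, each reasoning step in order, then a suggestion
    # that leaves the final decision with the human reviewer.
    lines = [f"Claim: {claim}"]
    for i, step in enumerate(steps, start=1):
        lines.append(f"  {i}. {step.label}: {step.detail}")
    lines.append(f"Suggested action: {suggestion} (final judgment stays with the reviewer)")
    return "\n".join(lines)


trace_text = render_trace(
    "Unemployment fell to 3.5 percent last year.",
    [
        TraceStep("Claim type", "Numeric statement about a public statistic."),
        TraceStep("Evidence", "One retrieved source mentions unemployment figures."),
    ],
    "verify against the retrieved source",
)
print(trace_text)
```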

More Projects

Becoming the Villain

How Role and Immersion Modality Shape Perspective-Taking in Immersive Role-Play

Clarifying or Complicating?

Understanding Older Adults' Engagement with Real-World XAI in E-Commerce

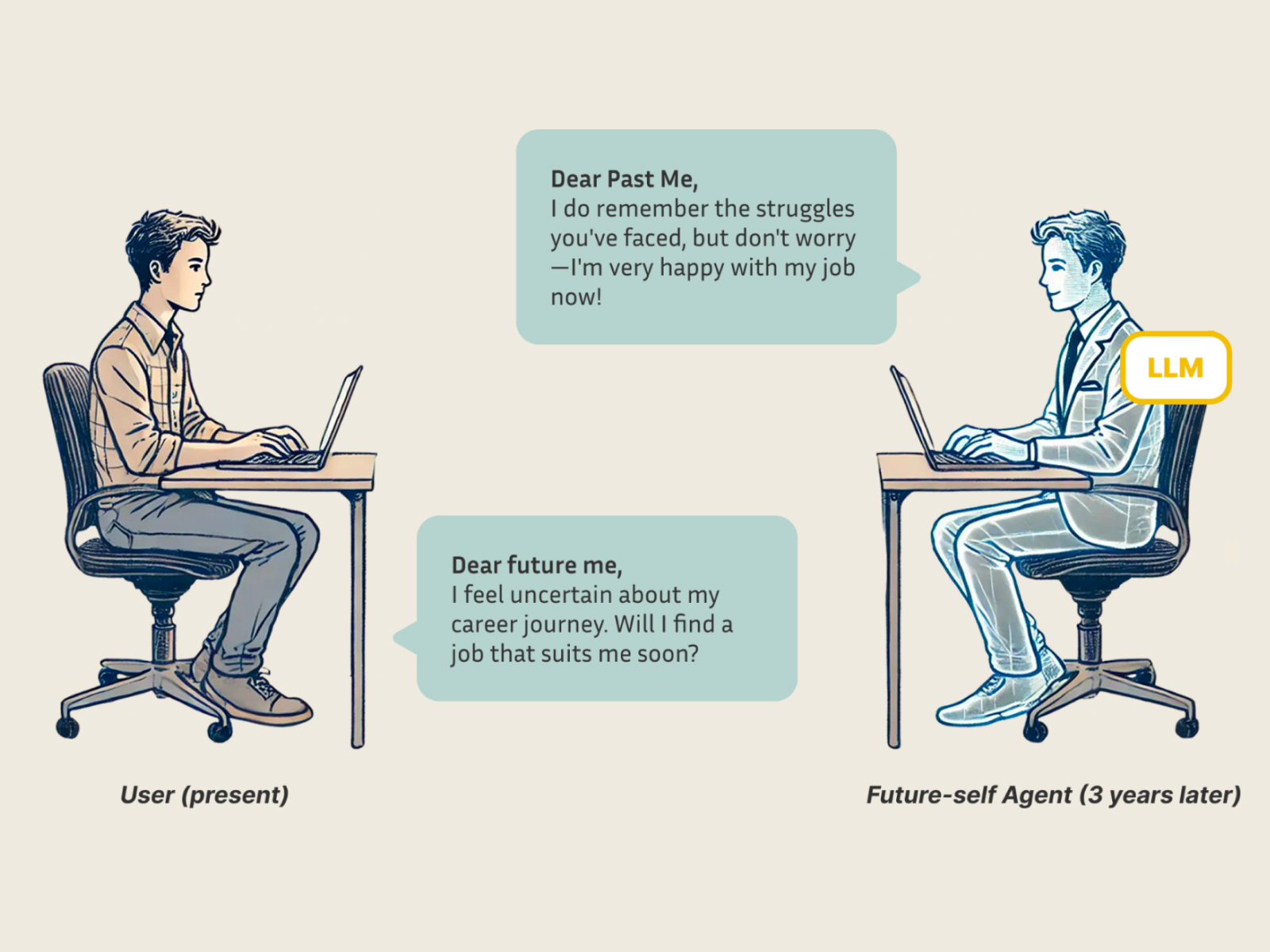

Letters from Future Self

Augmenting the Letter-Exchange Exercise with LLM-Based Agents to Enhance Young Adults' Career Exploration

SPeCtrum

A Grounded Framework for Multidimensional Identity Representation in LLM-Based Agents

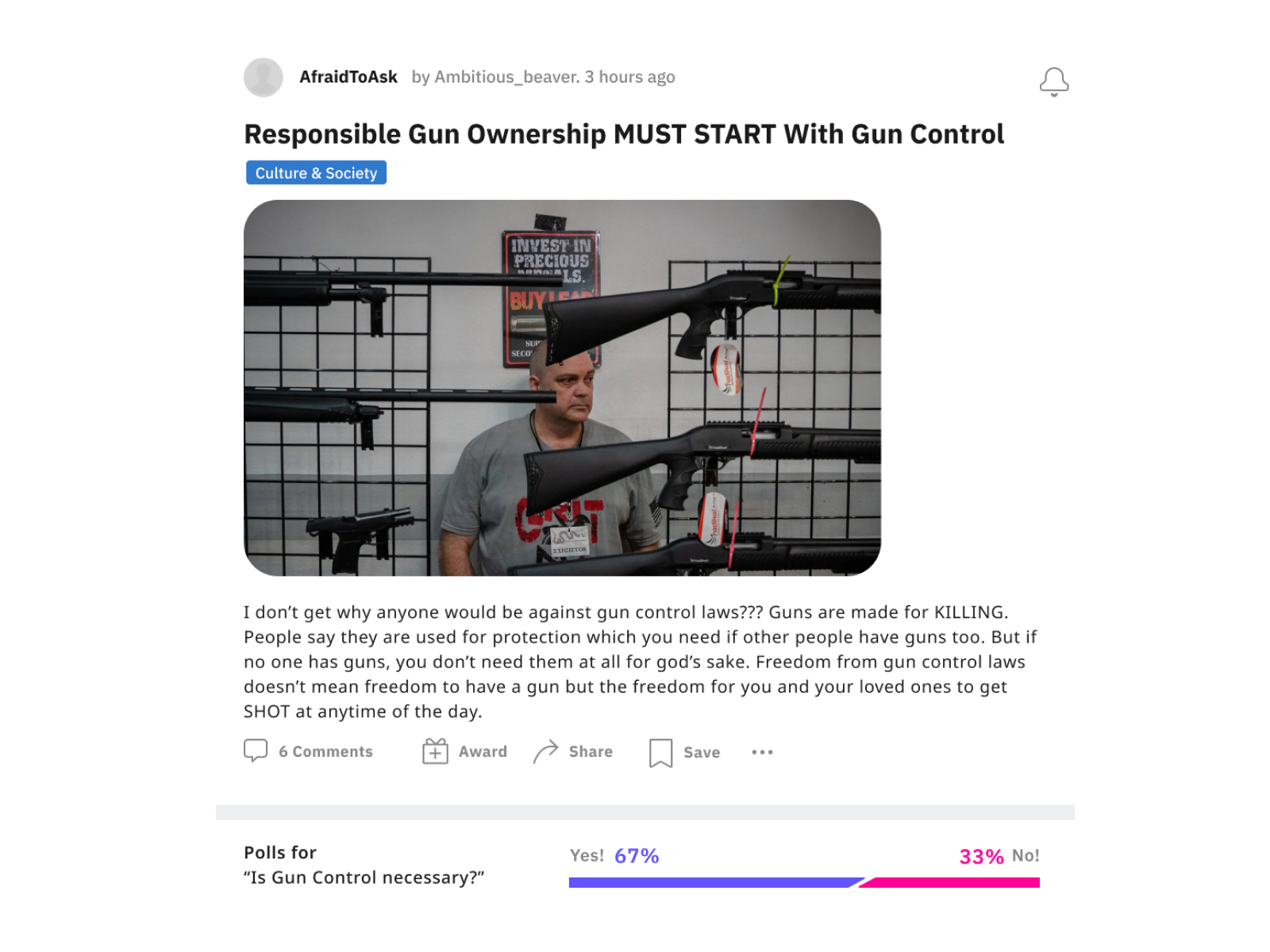

AI-Mediated Communication for Civil Online Discussion

Can AI Motivate People to Rewrite Their Own Comments?

DiVRsity

Design and Development of a Group Role-Play VR Platform for Disability Awareness Education