Clarifying or Complicating?

Understanding Older Adults' Engagement with Real-World XAI in E-Commerce

Explainable AI (XAI) · Recommender Systems · Older Adults · Trust & Agency · Inclusive Design

What happens when AI explanations meant to build trust instead leave people confused, or even feeling watched? Older adults are increasingly active in digital marketplaces, yet most explainable AI (XAI) research focuses on younger, tech-savvy users. This project explored how older adults engage with explainability features in NAVER Shopping, South Korea's largest e-commerce platform.

Project Overview

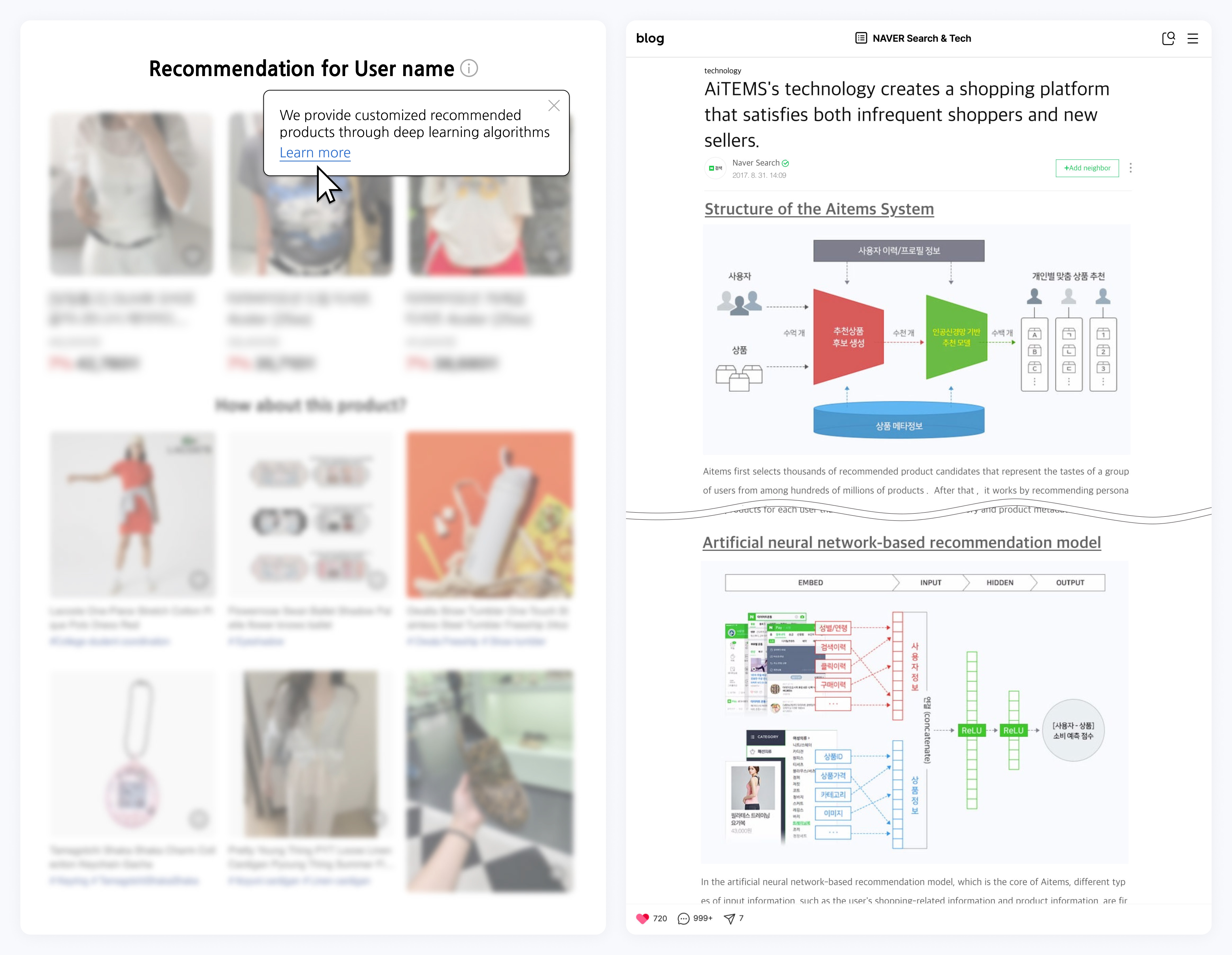

This project examined how older adults interact with explainable recommender systems in the live environment of NAVER Shopping. We focused on three deployed explanation types — global (system-level descriptions), local (item-level rationales), and user-model dashboards (editable preference profiles). NAVER Shopping was selected as the study site because it represents one of the few real-world deployments of explainable recommender features, offering a unique opportunity to investigate XAI in everyday use rather than in controlled prototypes.

Approach

We conducted a two-session qualitative study with 20 older adults (aged 60+) who regularly shopped online. In Session 1, participants shared their existing perceptions of, and prior experiences with, NAVER's recommender systems and personalized recommendations. In Session 2, they interacted directly with NAVER's live XAI features while thinking aloud. All sessions were recorded, transcribed, and thematically analyzed to capture both convergence and divergence in how participants interpreted the explanations.

Results & Contributions

- Recommendations were often invisible—or treated as advertising. Personalized recommendations and XAI cues were frequently overlooked; even when noticed, they were sometimes dismissed as promotions rather than recognized as personalized support.

- Global explanations raised awareness but polarized trust. System-level descriptions helped some participants understand personalization and trust the system's authority, while others read them as persuasive marketing copy and became skeptical.

- Behavior-grounded local rationales enabled actionable evaluation. Item-level reasons helped participants quickly judge relevance; even skeptical users recalibrated trust when rationales aligned with their own behavior.

- User-model dashboards offered control while surfacing surveillance boundaries. Dashboards increased understanding and perceived agency, but also triggered discomfort and anxiety about how much personal activity was visible and accumulated.

- We translate these tensions into a diagnostic lens and concrete design strategies. We frame awareness, evaluation, and transparency tensions as actionable opportunities (e.g., visually separating personalization from ads, framing explanations around users' behaviors, and designing dashboards with clearer control and comfort boundaries).

More Projects

Becoming the Villain

How Role and Immersion Modality Shape Perspective-Taking in Immersive Role-Play

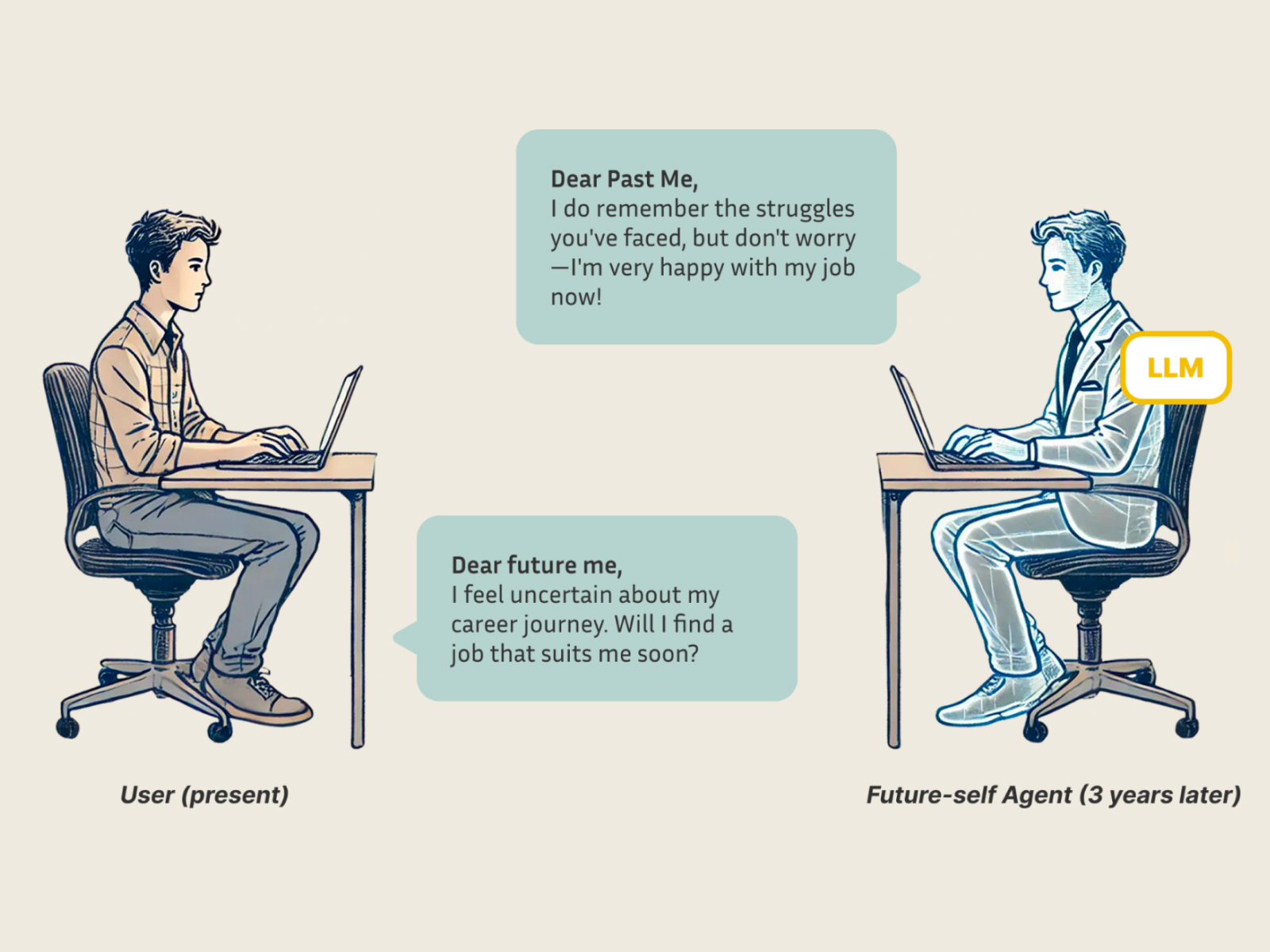

Letters from Future Self

Augmenting the Letter-Exchange Exercise with LLM-Based Agents to Enhance Young Adults' Career Exploration

SPeCtrum

A Grounded Framework for Multidimensional Identity Representation in LLM-Based Agents

AI-Mediated Communication for Civil Online Discussion

Can AI Motivate People to Rewrite Their Own Comments?

DiVRsity

Design and Development of a Group Role-Play VR Platform for Disability Awareness Education

From Verification to Explanation

Designing an LLM-Based System for Claim Detection and Transparent Reasoning