AI-Mediated Communication for Civil Online Discussion

Can AI Motivate People to Rewrite Their Own Comments?

AI-Mediated Communication · Online Discourse · Conflict Resolution · Self-Moderation · AI Feedback

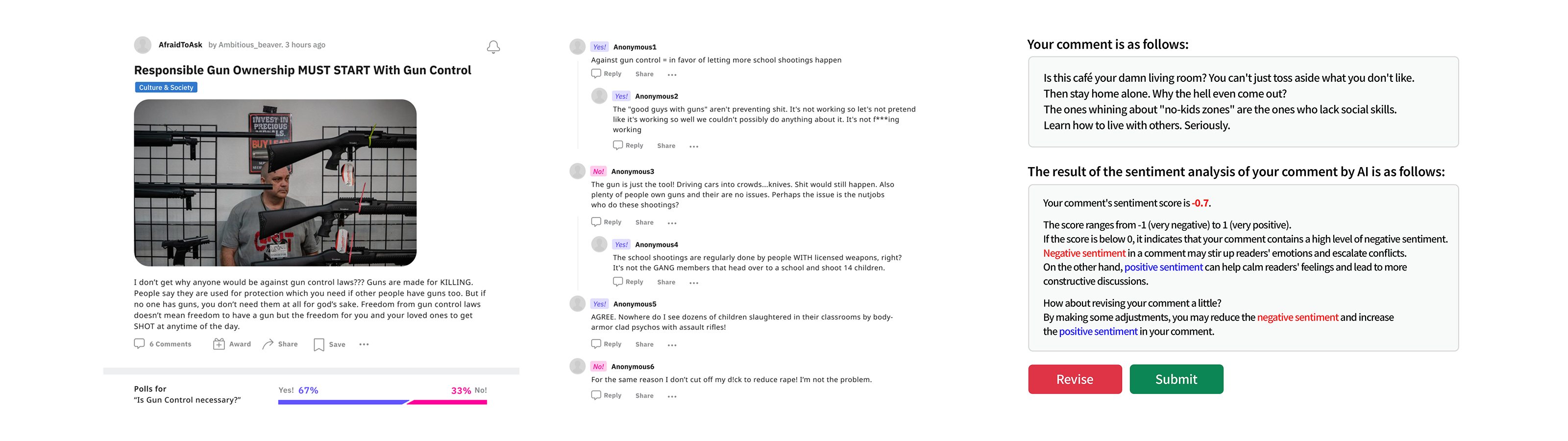

Online debates about social issues often escalate into hostility, making constructive dialogue hard to sustain. This project explored whether AI-mediated communication (AI-MC) could encourage people to revise their own comments before posting. Rather than punishing users or removing content, we asked whether subtle, pre-publication feedback could nudge people toward more civil engagement while preserving their agency.

Project Overview

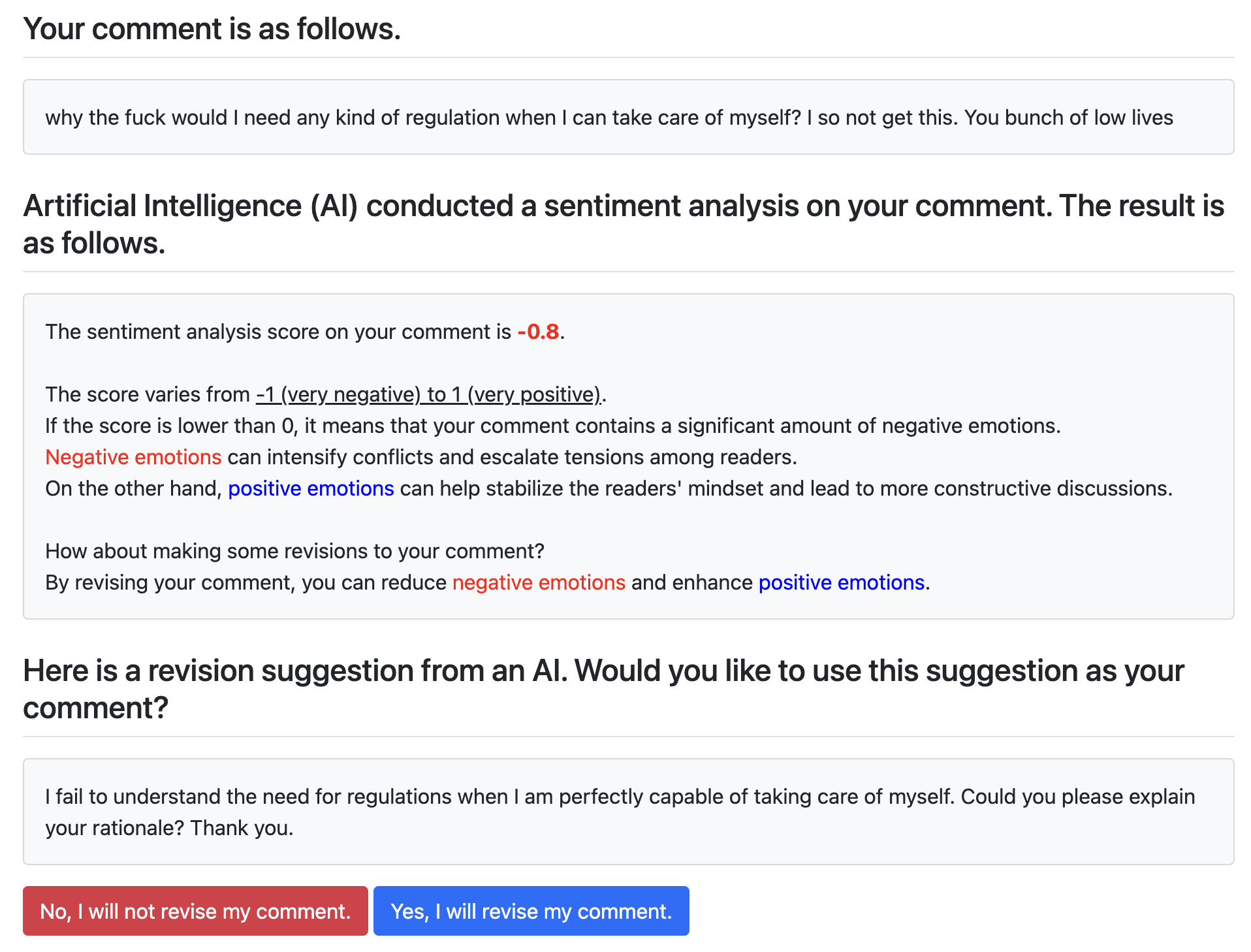

We investigated AI-MC as a facilitator of self-moderation in online discourse. Instead of relying on heavy-handed moderation or generic prompts, the system provided lightweight, actionable feedback, such as sentiment scores and AI-generated rewrite suggestions. These cues encouraged participants to reflect on their tone and adjust their wording without changing the core of their message. By positioning AI as a facilitator rather than an arbiter, we examined how such interventions could promote self-reflection, reduce hostility, and strengthen trust in digital communities. To support ecological validity, debate topics covered a range of socially divisive issues, such as "No Kids Zones."
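
The feedback loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the project's implementation: the word lists and the functions `sentiment_score`, `suggest_rewrite`, and `review_draft` are hypothetical stand-ins for a trained sentiment model and an LLM rewriter. What the sketch does show is the key design property: the system only informs the author and never blocks or alters the post.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical toy lexicons; a deployed system would use a trained sentiment model.
NEGATIVE = {"stupid": -2.0, "idiots": -2.0, "hate": -1.5, "ridiculous": -1.0}
POSITIVE = {"understand": 1.0, "respect": 1.5, "fair": 1.0, "thanks": 1.0}

# Hypothetical stand-in for an LLM call that proposes a calmer phrasing.
SOFTER = {"idiots": "mistaken", "stupid": "unconvincing", "hate": "dislike"}


def _words(text: str) -> list[str]:
    return [w.strip(".,!?\"'").lower() for w in text.split()]


def sentiment_score(text: str) -> float:
    """Crude score in [-1, 1]: mean weight of matched lexicon words, halved and clipped."""
    hits = [NEGATIVE.get(w, POSITIVE.get(w, 0.0)) for w in _words(text)]
    hits = [x for x in hits if x != 0.0]
    if not hits:
        return 0.0
    return max(-1.0, min(1.0, sum(hits) / len(hits) / 2.0))


def suggest_rewrite(text: str) -> str:
    """Swap hostile words for milder ones, keeping the rest of the message intact."""
    out = []
    for token in text.split():
        core = token.strip(".,!?\"'")
        milder = SOFTER.get(core.lower())
        out.append(token.replace(core, milder) if milder else token)
    return " ".join(out)


@dataclass
class Feedback:
    score: Optional[float]     # None when the sentiment-score cue is off
    suggestion: Optional[str]  # None when the rewrite cue is off


def review_draft(draft: str, show_score: bool, show_rewrite: bool) -> Feedback:
    """Pre-publication feedback: informs the author but never blocks or edits the post."""
    return Feedback(
        score=sentiment_score(draft) if show_score else None,
        suggestion=suggest_rewrite(draft) if show_rewrite else None,
    )


draft = "People who want No Kids Zones are idiots."
fb = review_draft(draft, show_score=True, show_rewrite=True)
print(fb.score)       # -1.0
print(fb.suggestion)  # People who want No Kids Zones are mistaken.

# The author decides what to post; if they adopt the suggestion, it is re-scored instantly.
print(sentiment_score(fb.suggestion))  # 0.0
```

Swapping in a real sentiment model or LLM would change only `sentiment_score` and `suggest_rewrite`; the decision to post stays with the author either way.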

Approach

- Study 1 (N = 423): A 2×2 web experiment testing the effects of sentiment scores (on/off) and rewrite suggestions (on/off). Participants drafted comments on a contentious issue, received lightweight AI feedback, and could revise before posting.

- Study 2 (large-scale): Extended this approach with a focus on AI-generated rewrite suggestions. Participants were shown alternative phrasings generated by AI and decided whether to adopt them. This study examined both the likelihood of revision and the acceptance of AI-mediated rewrites.
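
A 2×2 design like Study 1's requires assigning each participant to one of four cells. The page does not describe the randomization procedure, so the sketch below assumes simple balanced assignment (cycle through the cells, then shuffle); `assign_conditions` is a hypothetical helper and the seed is arbitrary.

```python
import random
from collections import Counter
from itertools import product

# Four cells of the 2x2 design: (show_score, show_rewrite), each on or off.
CONDITIONS = list(product([False, True], repeat=2))


def assign_conditions(n_participants: int, seed: int = 0) -> list[tuple[bool, bool]]:
    """Balanced random assignment (hypothetical helper).

    Cycles through the four cells so their sizes differ by at most one,
    then shuffles so the order of arrival does not determine the condition.
    """
    rng = random.Random(seed)
    cells = [CONDITIONS[i % len(CONDITIONS)] for i in range(n_participants)]
    rng.shuffle(cells)
    return cells


assignments = assign_conditions(423)
counts = Counter(assignments)
for (score_on, rewrite_on), k in sorted(counts.items()):
    print(f"score={'on' if score_on else 'off'}, rewrite={'on' if rewrite_on else 'off'}: {k}")
```

Because N = 423 is not a multiple of four, three cells receive 106 participants and one receives 105 under this scheme.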

Results & Contributions

- AI feedback drives revision. Showing a sentiment score made participants ~62% more likely to revise than receiving a generic suggestion.

- Improved tone. Lower (more negative) initial sentiment scores predicted a higher likelihood of revision and a more positive tone after revision.

- Worked even for skeptics. Participants skeptical of AI also revised when shown scores.

- Design considerations. Feedback that arrives before publication, stays concise and actionable, and offers a clear "Revise" affordance with instant re-scoring may encourage constructive engagement, particularly when AI is positioned as a facilitator rather than a censor.
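
The page does not say whether "~62% more likely" is a ratio of revision rates (a risk ratio) or an odds ratio from a regression model, and the two can diverge noticeably when revision is common. The sketch below uses made-up counts, chosen only so that the risk ratio comes out at 1.62, to show how far the odds ratio can sit from it for the same data. The functions and numbers are illustrative, not the study's analysis or data.

```python
def risk_ratio(rev_a: int, n_a: int, rev_b: int, n_b: int) -> float:
    """Ratio of revision rates: P(revise | A) / P(revise | B)."""
    return (rev_a / n_a) / (rev_b / n_b)


def odds_ratio(rev_a: int, n_a: int, rev_b: int, n_b: int) -> float:
    """Ratio of revision odds: [p_a / (1 - p_a)] / [p_b / (1 - p_b)]."""
    odds_a = rev_a / (n_a - rev_a)
    odds_b = rev_b / (n_b - rev_b)
    return odds_a / odds_b


# Hypothetical counts for illustration only (not study data):
# 81 of 200 revise when shown a score, 50 of 200 with a generic suggestion.
rr = risk_ratio(81, 200, 50, 200)
orr = odds_ratio(81, 200, 50, 200)
print(f"risk ratio = {rr:.2f} -> {100 * (rr - 1):.0f}% more likely")   # risk ratio = 1.62 -> 62% more likely
print(f"odds ratio = {orr:.2f} -> {100 * (orr - 1):.0f}% higher odds")  # odds ratio = 2.04 -> 104% higher odds
```

With identical counts, the same effect reads as "62% more likely" or "104% higher odds", so stating which measure is reported matters when comparing results across studies.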

More Projects

Becoming the Villain

How Role and Immersion Modality Shape Perspective-Taking in Immersive Role-Play

Clarifying or Complicating?

Understanding Older Adults' Engagement with Real-World XAI in E-Commerce

Letters from Future Self

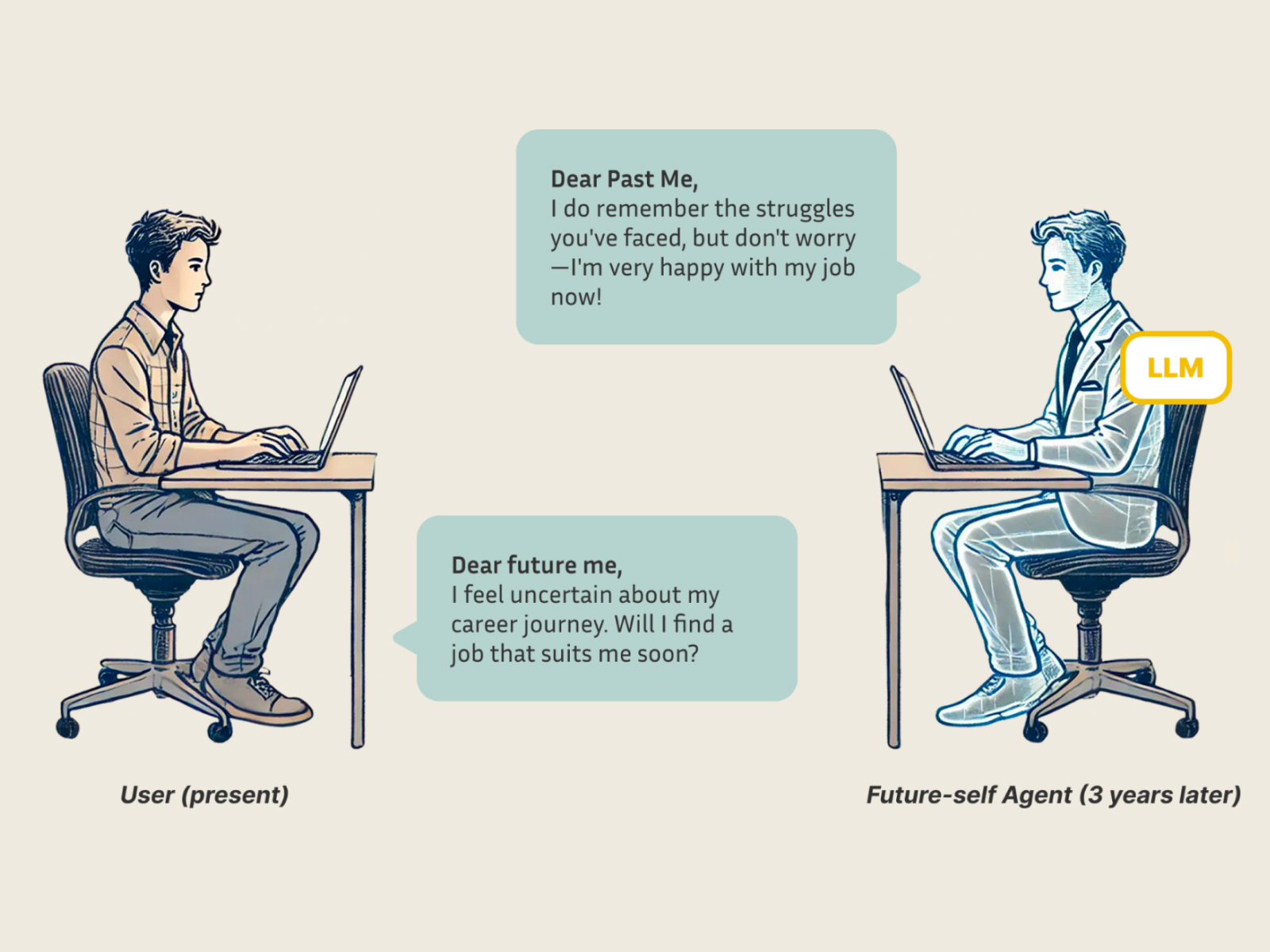

Augmenting the Letter-Exchange Exercise with LLM-Based Agents to Enhance Young Adults' Career Exploration

SPeCtrum

A Grounded Framework for Multidimensional Identity Representation in LLM-Based Agents

DiVRsity

Design and Development of a Group Role-Play VR Platform for Disability Awareness Education

From Verification to Explanation

Designing an LLM-Based System for Claim Detection and Transparent Reasoning